Anthology of conversations about AI past and future

A handy reference to all the great interviews you might have missed

As I’ve said in this blog a number of times before, I write to learn. Another mode of learning that really works for me is talking things through with other people, particularly folks who think deeply and reason thoughtfully.

Over the Dec/Jan period, I’ve been fortunate to be able to nudge 15 of those people to put their musings into written form. What started as an idea for some lighter holiday reading turned into a veritable ‘15 days of Christmas’ as I got the anticipatory delight of clicking into a newly filled Google Doc and reading everything they said! It really did feel like I was getting a sneak peek of something I then had the pleasure of sharing with the world. This will definitely be a repeated feature at some point.

While many of you have shared with me that you LOVED the holiday reading, I know other folks have been away from their work emails (good on you!) and have missed a fair chunk of the series. Voila! All 15 (plus a bonus where I interview myself) collected below.

"A justification for AI spending is that it will help us with hard things. So let’s try to work on hard things."

Mark Moloney is a still-hands-on-keyboard engineer who can also be persuaded to write in English. Where others talk, Mark builds; which makes his perspective both grounded and pragmatic.

"My biggest fear is that we fail to act ethically in pursuit of profit, that we will continue to do things we know are wrong in the name of innovation."

Kate Bower is a leading consumer and digital rights advocate dedicated to ensuring fair and safe AI and comprehensive privacy protections for Australian consumers.

"Medicine needs AI. The needs of an aging population with chronic diseases are challenging the system."

An Associate Professor at the University of Melbourne, Helen Frazer is a radiologist, breast cancer clinician and AI researcher with over 25 years’ experience leading breast cancer screening services.

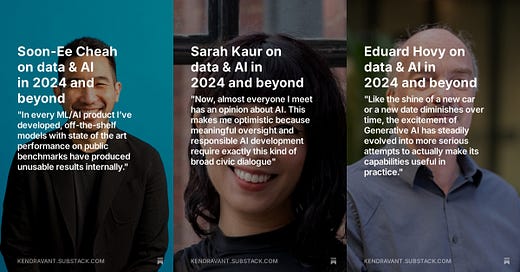

"In every ML/AI product I’ve developed, off-the-shelf models with state of the art performance on public benchmarks have produced unusable results internally."

Soon-Ee Cheah originally trained as a pharmacist. Now he eats complicated AI research papers for breakfast and communicates complex mathematical concepts in a lucid, understandable and business-focussed way that is unparalleled.

"We have to take LLMs and all the other useful tools we’ve come up with over the last 40 years and apply them to usefully and cost effectively solve specific important problems."

To borrow a description a dear colleague recently posted on LinkedIn, Kendra is a true triple threat: real-world commercial experience, deep intellect, and huge generosity of spirit and human curiosity.

"I’d like to point out there’s no way to stay on top of everything, and trying to do that will only drive you mad."

Sami Mäkeläinen is a strategic foresight professional. His work centres on teaching the mindful application of generative AI and other emerging technologies, their societal impact, and their role in building resilient systems and facilitating capability uplift for organisations.

"AI only changes the world when skilled people use it to build safe, useful products."

Tracy Moore has been using data & AI to drive commercial success in top line customer growth and bottom line profitability for more than 15 years. She is also a wickedly good strategist and storyteller.

"Nothing beats looking at the data directly. I don’t expect that this aspect of AI will ever go away: to a first-order approximation, the model is the data."

Edward Kim is currently building things at Cohere when he isn’t at the University of Toronto, where he serves as an adjunct research professor working at the intersection of materials informatics and LLMs! A first-class brain inside a first-class human.

"Many clients I work with assume that a proof of concept (POC) can seamlessly transition into production and yield immediate growth. The reality is much more complex!"

Slav Tabachnik is the National Generative AI Transformation Leader in the Deloitte Australia Transformation Office. His remit includes “enabling resilient and innovative transformation” across the consulting practice, anticipating and harnessing Gen AI-based disruption before it comes knocking.

"The push for nuclear power to feed the seemingly insatiable AI hunger is also an interesting advancement that will shape global geopolitics long term"

Kobi Leins has experience in digital ethics, disarmament and human rights, and acts as a technical expert for Standards Australia. Intriguingly, she loves both dachshunds and container ships!

"I am hopeful that as we continue to ask these big questions about who we are, why we do what we do, and how we best work with each other, that AI could really be used for good as part of the answer."

James Bergin has an unrivalled ability to pull out a framework, whip up a genuinely informative metaphor, and test the clarity and logic of a still-forming idea with gentleness and rigour at the same time.

"We are dealing with new technologies: it’s still very unclear how much value they will create and—crucially—who will manage to capture that value."

Andrew John is a professor of economics who teaches macroeconomics and managerial ethics/ethical leadership in the Business School MBA and Executive Programs.

"Both the technology and regulatory environment have rapidly evolved. What hasn’t changed is human nature - if orgs want to get the benefits that AI promises, they need to understand their people"

Myfanwy Wallwork currently spends her days deep in the practicalities of how organisations can actually implement useful AI strategies and governance processes.

"We should look at models like LLMs as the backbone piece of a much bigger puzzle."

Daniel Beck, a senior lecturer at RMIT University in Melbourne, loves listening to other people’s problems and figuring out how we can leverage NLP, ML and AI to help solve them.

"Now, almost everyone I meet has an opinion about AI. This makes me optimistic because meaningful oversight and responsible AI development require exactly this kind of broad civic dialogue"

Sarah Kaur is a researcher and practitioner of human-centred design with a specific focus on Responsible AI and on embedding human insight in machine learning and AI research and product development.

"Like the shine of a new car or a new date diminishes over time, the excitement of Generative AI has steadily evolved into more serious attempts to actually make its capabilities useful in practice."

Eduard Hovy is the Executive Director of Melbourne Connect, a Professor in the University of Melbourne’s School of Computing and Information Systems and a faculty member at CMU’s Language Technologies Institute.